Overview

The Lucia Supercomputer is a high-performance computing system designed for diverse computational workloads. Its architecture comprises the following key components:

- Compute: A variety of nodes, including CPU, GPU, and specialized nodes, tailored for tasks such as memory-intensive computations, AI processing, and visualization.

- Storage: A robust IBM Spectrum Scale parallel filesystem with approximately 3 PiB of storage, supported by an offsite backup system.

- Service and Management: Essential for system operations and management, these partitions are not directly accessible to end users.

All components are interconnected through an HDR InfiniBand network and a 10 Gb/s Ethernet network, ensuring high-speed communication.

Our compute infrastructure comprises 364 nodes: CPU, GPU, and specialized servers powered by AMD EPYC Milan (Zen 3) processors and NVIDIA A100 and T4 GPUs.

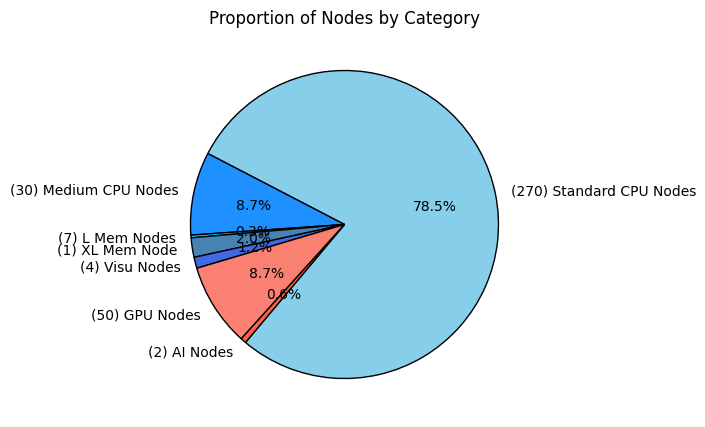

Compute Nodes Distribution

The distribution of the various types of compute nodes is depicted in the following pie chart:

- CPU: 300 nodes
  - Standard: 270 nodes
  - Medium: 30 nodes
- GPU: 50 nodes
- Specialized: 14 nodes
  - Large Memory: 7 nodes
  - XLarge Memory: 1 node
  - AI: 2 nodes
  - Visualization: 4 nodes

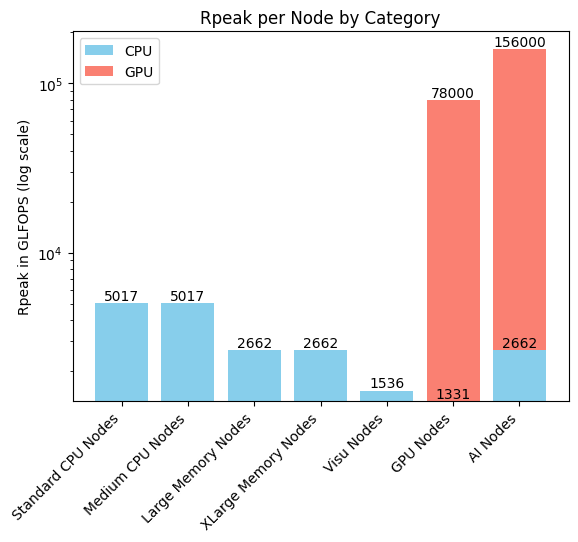
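The node counts in the distribution can be checked with a few lines of arithmetic. This minimal sketch rebuilds the hierarchy as a dictionary, confirms that the per-category subtotals add up to the 364 nodes quoted above, and derives each category's share of the pie chart. The dictionary layout is purely illustrative.

```python
# Node counts as listed in the distribution above (illustrative layout).
NODE_DISTRIBUTION = {
    "CPU": {"Standard": 270, "Medium": 30},
    "GPU": {"GPU": 50},
    "Specialized": {"Large Memory": 7, "XLarge Memory": 1, "AI": 2, "Visualization": 4},
}


def category_totals(dist: dict[str, dict[str, int]]) -> dict[str, int]:
    """Sum the sub-category node counts within each top-level category."""
    return {category: sum(sub.values()) for category, sub in dist.items()}


totals = category_totals(NODE_DISTRIBUTION)
grand_total = sum(totals.values())
# Percentage of all nodes in each category, rounded to one decimal place.
shares = {category: round(100 * n / grand_total, 1) for category, n in totals.items()}

print(totals, grand_total)  # {'CPU': 300, 'GPU': 50, 'Specialized': 14} 364
print(shares)               # {'CPU': 82.4, 'GPU': 13.7, 'Specialized': 3.8}
```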

Theoretical Performance

The Rpeak performance of the various types of compute nodes is depicted in the following bar chart:

Detailed hardware specifications for all compute nodes are available in the Hardware section.
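Rpeak is the product of the node count, the cores per node, the clock frequency, and the double-precision floating-point operations each core can retire per cycle. The sketch below encodes that formula. The example node (two 64-core CPUs at 2.45 GHz) is hypothetical and not taken from this page. The figure of 16 FP64 FLOPs per cycle is the commonly quoted value for Zen 3 cores with two 256-bit FMA pipes. Substitute the real values from the Hardware section.

```python
def rpeak_tflops(nodes: int, cores_per_node: int, clock_ghz: float,
                 flops_per_cycle: int) -> float:
    """Theoretical peak (Rpeak) in TFLOP/s for a group of identical CPU nodes."""
    # Rpeak = nodes x cores per node x clock (cycles/s) x FP64 FLOPs per core per cycle
    flops_per_second = nodes * cores_per_node * clock_ghz * 1e9 * flops_per_cycle
    return flops_per_second / 1e12


# Hypothetical node: 2 x 64-core CPUs at 2.45 GHz, 16 FP64 FLOPs/cycle/core.
per_node = rpeak_tflops(nodes=1, cores_per_node=128, clock_ghz=2.45, flops_per_cycle=16)
print(f"{per_node:.2f} TFLOP/s per node")  # 5.02 TFLOP/s per node
```

Because the formula is linear in the node count, the Rpeak of a partition is the per-node value multiplied by its number of nodes. Sustained application performance is normally well below this ceiling.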

All compute nodes are available for computations through the Slurm batch scheduler.
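Slurm accepts any batch script that starts with a shebang line, and it reads `#SBATCH` directives from the comment lines that precede the first executable statement. A batch job can therefore be written directly in Python. The following is a minimal sketch, not a Lucia-specific template. The job name and resource values are hypothetical. Partition and account names are site-specific, so they are deliberately left out. `SLURM_JOB_ID` and `SLURM_JOB_NUM_NODES` are standard variables that Slurm sets for batch jobs.

```python
#!/usr/bin/env python3
#SBATCH --job-name=hello-lucia
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --time=00:05:00
# Hypothetical values above; add --partition/--account lines as required on Lucia.
"""Minimal Slurm batch job written in Python (a sketch)."""
import os
import socket


def job_summary() -> str:
    """Describe where this job runs, using variables Slurm sets for batch jobs."""
    job_id = os.environ.get("SLURM_JOB_ID", "not-under-slurm")
    nodes = os.environ.get("SLURM_JOB_NUM_NODES", "1")
    return f"job {job_id} on {socket.gethostname()} ({nodes} node(s))"


if __name__ == "__main__":
    print(job_summary())
```

If the script is saved as the hypothetical `hello_lucia.py` and submitted with `sbatch hello_lucia.py`, it writes its job ID and host name to the Slurm output file. Run directly with `python3 hello_lucia.py`, it reports `not-under-slurm` instead.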

The storage system, based on IBM Spectrum Scale (GPFS), offers a unified, tiered solution with 3 PiB of capacity. It includes:

- Flash Tier (200 TB): NVMe SSDs for high-speed I/O, acting as a burst buffer.

- Standard Tier (2.87 PB): high-capacity storage on NL-SAS disks.
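This page mixes decimal capacity units (TB, PB) with the binary pebibyte (PiB), and at this scale the difference is noticeable: 1 PiB is about 12.6% larger than 1 PB. The sketch below adds the two tiers and converts the total. It assumes that the tier sizes use decimal SI units (1 TB = 10^12 bytes), which this page does not state explicitly.

```python
# Assumption: the tier sizes use decimal SI units (1 TB = 10**12 bytes).
TB = 10**12
PB = 10**15
PIB = 2**50  # 1 PiB (pebibyte) = 1024**5 bytes

tiers_bytes = {
    "Flash Tier": 200 * TB,      # NVMe burst buffer
    "Standard Tier": 2870 * TB,  # 2.87 PB of NL-SAS disks
}

total = sum(tiers_bytes.values())
print(f"total: {total / PB:.2f} PB = {total / PIB:.2f} PiB")  # total: 3.07 PB = 2.73 PiB
for name, size in tiers_bytes.items():
    # Flash Tier: 6.5%, Standard Tier: 93.5%
    print(f"{name}: {100 * size / total:.1f}% of capacity")
```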

Logical partitioning is managed through IBM Spectrum Scale filesets, and data are migrated seamlessly between the two physical storage tiers.

Performance Benchmarks

Filesets backed by the burst buffer deliver the highest performance, making them ideal for I/O-intensive workloads, while the other filesets provide balanced performance for general-purpose HPC tasks.

| Fileset type | Read bandwidth (GB/s) | Write bandwidth (GB/s) | IOPS (4k reads) |
|---|---|---|---|
| With burst buffer | 270 | 200 | 4–5M |
| Without burst buffer | 18 | 18 | 450k |
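To make the bandwidth gap concrete, the sketch below estimates how long it takes to read a hypothetical 1 TB (1000 GB) dataset at each read rate in the table. These are aggregate peak figures for the whole storage system, so the estimates are lower bounds. A single job sharing the filesystem with other jobs will usually see only a fraction of this bandwidth.

```python
# Aggregate peak read bandwidths from the table above (GB/s).
READ_GB_S = {"With burst buffer": 270, "Without burst buffer": 18}


def read_seconds(size_gb: float, bandwidth_gb_s: float) -> float:
    """Lower bound on the time to read size_gb at a sustained bandwidth."""
    return size_gb / bandwidth_gb_s


for fileset, bandwidth in READ_GB_S.items():
    # Hypothetical 1 TB (1000 GB) dataset.
    print(f"{fileset}: {read_seconds(1000, bandwidth):.1f} s")
# With burst buffer: 3.7 s
# Without burst buffer: 55.6 s
```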

Backup System

The backup infrastructure includes an IBM TS4500 tape library with 200 tapes (20 TB each), providing 4 PB of uncompressed capacity.

To learn how filesets organize user and project storage, and which ones use the burst buffer, see the Storage section.

Lucia's communication network consists of two main parts:

- Ethernet network

  The 10 Gb/s Ethernet network is mainly used for administrative communication and tasks, for SSH connections to the cluster, and for user data transfers into and out of the cluster. It is divided into multiple subnets/VLANs dedicated to tasks such as node deployment, user access, and server/device management.

- InfiniBand network

  Lucia features a high-speed, low-latency HDR InfiniBand network in a non-blocking fat-tree topology. It is primarily used by the compute nodes to communicate with each other and exchange data during jobs, and by the high-performance IBM Spectrum Scale storage system.
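Network link rates are quoted in gigabits per second, whereas the storage figures above are in gigabytes per second, so the two differ by a factor of 8. The sketch below converts a hypothetical 1 TB transfer into time at the raw line rate of each network. It assumes a 200 Gb/s link for HDR InfiniBand, which is the standard HDR rate. Individual nodes on a given system may be attached at a lower rate, and protocol overhead is ignored, so real transfers take longer.

```python
def line_rate_seconds(size_bytes: float, link_gbit_s: float) -> float:
    """Time to move size_bytes at the raw line rate, ignoring protocol overhead."""
    return size_bytes * 8 / (link_gbit_s * 1e9)  # bytes -> bits, Gb/s -> bit/s


ONE_TB = 10**12  # hypothetical dataset size in bytes
LINKS_GBIT_S = {
    "10 Gb/s Ethernet": 10,
    "HDR InfiniBand (200 Gb/s, assumed)": 200,
}

for name, rate in LINKS_GBIT_S.items():
    print(f"{name}: {line_rate_seconds(ONE_TB, rate):.0f} s")
# 10 Gb/s Ethernet: 800 s
# HDR InfiniBand (200 Gb/s, assumed): 40 s
```

Since user data enters and leaves the cluster over Ethernet, moving a 1 TB dataset in or out takes at least about 13 minutes at line rate.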

- Operating system: Red Hat Enterprise Linux 8

- Job scheduler: Slurm 23.02

- Web portal: Open OnDemand

- Programming environment: Cray PE 22.09

- Main software installation framework: EasyBuild 4.9.0

To get started with Lucia’s software stack and installed scientific tools, see the Software section.